Introduction to GPUs and Machine Learning

Graphics Processing Units (GPUs) have become indispensable in machine learning (ML). GPUs were originally designed for rendering graphics, notably in video games. However, their ability to run thousands of calculations in parallel makes them ideal for ML tasks. Workloads such as the matrix multiplications at the heart of neural networks run far more efficiently on GPUs than on traditional CPUs. As a result, GPUs accelerate both the training and the execution (inference) of machine learning models.

Machine learning involves teaching computers to learn from data. It includes tasks like image recognition, natural language processing, and predictive analytics. These tasks require massive computational power. This is where GPUs come in. They handle the intense workloads needed for training models. With their many cores, GPUs carry out simultaneous operations, reducing processing time.

When you search for the ‘best GPU for machine learning’, you look for speed, efficiency, and reliability. The right GPU lets your ML projects finish faster and makes larger models practical to train. It does not by itself make a model more accurate. In 2025, the demands on GPUs in this field continue to grow. Users expect faster and more complex computations, and manufacturers respond with more advanced GPUs tailored for ML. In this blog, we will walk through the factors that influence the choice of GPU for your machine learning needs.

Top Factors to Consider When Selecting a GPU for ML

Choosing the best GPU for machine learning involves several critical factors. Let’s delve into these key considerations to ensure you make an informed decision.

Compatibility with Machine Learning Frameworks

When selecting a GPU, first ensure compatibility with popular ML frameworks. Frameworks like TensorFlow and PyTorch reach the GPU through a vendor software stack, such as CUDA for NVIDIA or ROCm for AMD, so both the framework and that stack must support your GPU choice. This compatibility is crucial for smooth operation and performance optimization. Check the framework’s documentation for supported GPUs and driver versions.
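A quick way to confirm compatibility on an existing machine is to ask the framework itself. The sketch below assumes PyTorch is the framework in use (the function name is our own) and returns `None` when PyTorch is not installed, so it runs anywhere.

```python
def cuda_gpu_visible_to_pytorch():
    """Return True or False if PyTorch is installed, or None if it is not."""
    try:
        import torch  # assumption: PyTorch is the framework being checked
    except ImportError:
        return None
    # torch.cuda.is_available() reports whether PyTorch can use a GPU device.
    return torch.cuda.is_available()


status = cuda_gpu_visible_to_pytorch()
if status is None:
    print("PyTorch is not installed in this environment.")
elif status:
    print("PyTorch can see a usable GPU.")
else:
    print("PyTorch is installed but cannot see a usable GPU.")
```

A `False` result usually points to a driver or software-stack mismatch rather than a hardware fault, so it is worth checking versions before blaming the card.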

Performance Metrics for Machine Learning Tasks

Evaluate GPUs based on specific performance metrics. Look at core counts, clock speeds, and the throughput they deliver together. These factors influence how quickly a GPU can process ML tasks. Aim for GPUs with high teraflops (TFLOPS, trillions of floating-point operations per second) ratings at the numeric precision you plan to use, such as FP32 or FP16, since peak figures differ widely between precisions.
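Core count and clock speed combine into a theoretical peak throughput figure. The sketch below uses the common rule of thumb that each core can complete one fused multiply-add per cycle, counted as two floating-point operations. The GPU in the example is hypothetical, and real workloads rarely reach the theoretical peak.

```python
def peak_tflops(cores, clock_ghz, flops_per_core_per_cycle=2):
    """Theoretical peak throughput in TFLOPS.

    The default of 2 assumes one fused multiply-add (2 operations)
    per core per clock cycle.
    """
    flops = cores * clock_ghz * 1e9 * flops_per_core_per_cycle
    return flops / 1e12


# Hypothetical GPU: 10,000 cores running at 2.0 GHz.
print(f"{peak_tflops(10_000, 2.0):.1f} TFLOPS")  # 40.0 TFLOPS
```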

Memory and Bandwidth Requirements

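For ML, you need ample memory and high bandwidth. Memory capacity determines how large a model and batch the GPU can hold at one time, while memory bandwidth determines how fast data moves between the GPU’s own memory and its compute cores. More memory allows for larger models and batches without falling back on slower system memory. The link between the GPU and system memory (typically PCIe) matters too, because input data has to cross it.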
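To get a feel for how much memory a training job needs, a back-of-the-envelope estimate helps. The sketch below counts only weights, gradients, and optimizer states, assuming FP32 values and an Adam-style optimizer that keeps two extra values per parameter. Activations and framework overhead are deliberately left out, so treat the result as a lower bound.

```python
def training_memory_gb(num_params, bytes_per_param=4, optimizer_states=2):
    """Rough memory for weights, gradients, and optimizer states in GB.

    Excludes activations and framework overhead, which depend on the
    model architecture and batch size.
    """
    copies = 1 + 1 + optimizer_states  # weights + gradients + optimizer states
    return num_params * bytes_per_param * copies / 1e9


# Hypothetical model with 1 billion parameters, trained in FP32 with Adam.
print(f"{training_memory_gb(1_000_000_000):.1f} GB")  # 16.0 GB
```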

Power Efficiency and Thermal Design

Lastly, consider the GPU’s power consumption and cooling system. Efficient GPUs consume less energy for the same work, saving on electricity costs. A good thermal design prevents overheating and thermal throttling, maintaining performance and extending the GPU’s lifespan. Compare performance per watt rather than thermal design power (TDP) alone, since a low-TDP card that takes longer to finish a job can use more energy overall, and favor robust cooling solutions.

Leading GPU Models for Machine Learning in 2025

With 2025 upon us, a slew of GPUs stands out in the field of machine learning. Identifying the best GPU for machine learning is pivotal for achieving top-notch performance. In this section, we’ll highlight some leading models that have earned their stripes.

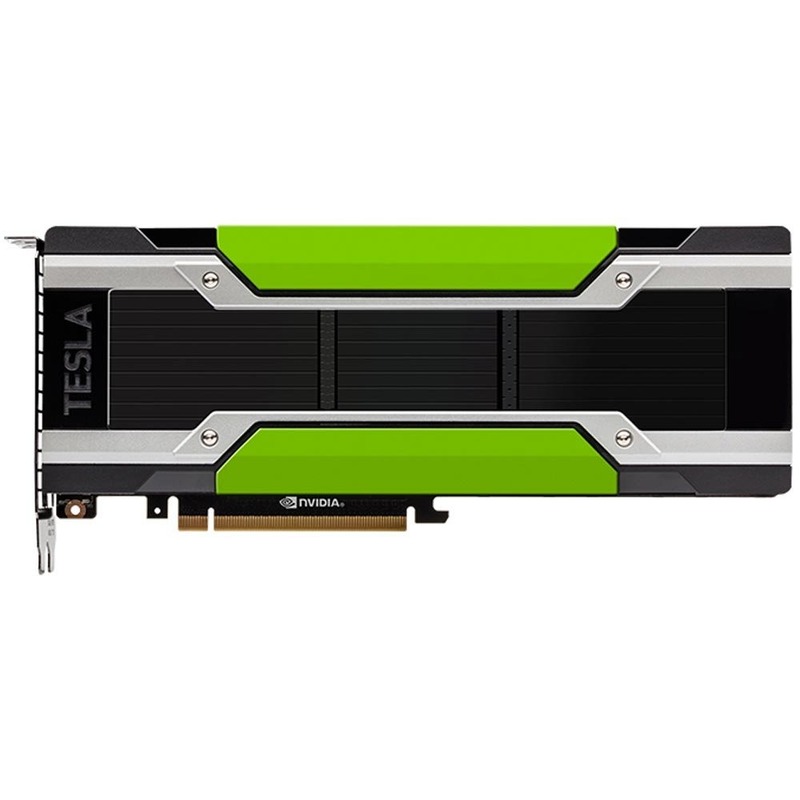

NVIDIA’s ‘Ampere’ Series

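NVIDIA continues to be a frontrunner. Its ‘Ampere’ GPUs, introduced in 2020, feature Tensor Cores, AI-specific units that accelerate the matrix math behind machine learning workflows. Their robust memory architecture supports large datasets, a must-have for complex ML tasks. NVIDIA has since released newer architectures, including Hopper, Ada Lovelace, and Blackwell, so check the current product lines before buying.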

AMD’s ‘Instinct’ Range

AMD isn’t far behind with its ‘Instinct’ series. Designed for high-performance computing, these accelerators offer impressive parallel processing capabilities. They are tailored to handle intricate calculations swiftly, thus shortening ML model training times.

Intel’s Xe Graphics Line

Intel has made leaps with its Xe graphics cards. Their versatile architecture handles various ML workloads with ease. Integrating these GPUs offers both power and adaptability for diverse machine learning applications.

Innovative Startups

Several startups are stepping up, challenging the incumbents. New players are crafting accelerator chips that are specific to machine learning needs, aiming for high throughput and low latency.

Each model comes with unique strengths. Think about your specific machine learning requirements when choosing the right GPU. Whether it’s speed, memory, or power efficiency, these 2025 GPUs are at the forefront of machine learning innovation.

Benchmarks and Performance Tests

To gauge the actual performance of GPUs for machine learning, benchmarks and performance tests are crucial. These tools provide quantifiable data, demonstrating how a GPU handles different machine learning tasks. When conducting these tests, it’s important to look for specific characteristics that match your ML workloads.

Real-World Machine Learning Workload Scenarios

For a realistic view, assess GPUs against real-world scenarios. These may include image recognition tasks, big data analytics, or natural language processing workloads. Choose scenarios that reflect your typical use cases. Monitor metrics like processing time and accuracy. A GPU might reach its best speed only in reduced-precision modes such as FP16 or INT8, which can cost some model accuracy. Real-world tests reveal such trade-offs.
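Measuring processing time fairly takes a little care. The sketch below is a minimal timing harness, not a full benchmark suite: it discards a few warm-up runs and reports the median of several timed runs. The `toy_workload` function is a placeholder standing in for one training or inference step of your own model.

```python
import statistics
import time


def benchmark(workload, warmup=2, repeats=5):
    """Return the median wall-clock time of workload() in seconds."""
    for _ in range(warmup):
        workload()  # warm-up runs are discarded (caches, lazy initialization)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def toy_workload():
    # Placeholder: replace with one step of your real workload.
    return sum(i * i for i in range(100_000))


print(f"median step time: {benchmark(toy_workload):.6f} s")
```

GPU frameworks often queue work asynchronously, so a real workload should wait for the device to finish before returning. Otherwise the timer may stop before the GPU has done the work.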

Industry Standard Benchmarking Suites

Industry benchmarks like MLPerf offer a standardized suite of tests. These benchmarks evaluate hardware on common tasks such as training deep learning models and running inference. They report results such as time to train and inference throughput, and some submissions also include power measurements. Comparing GPUs with industry benchmarks helps ensure you select a GPU that meets established performance criteria. Look for the latest results to get up-to-date insights on the best GPU for machine learning.

GPU Scalability and Multi-GPU Setups

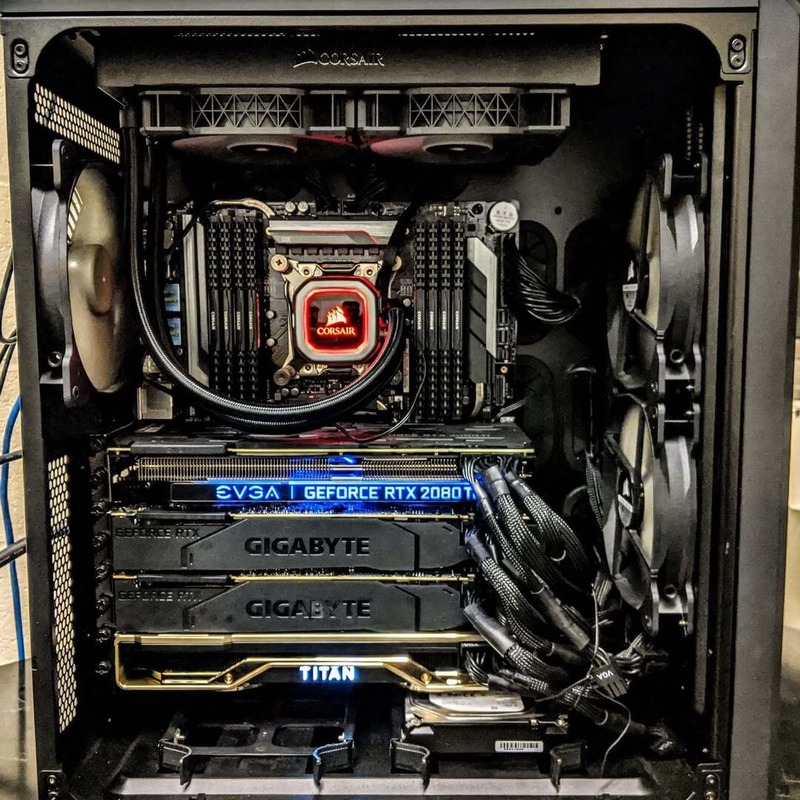

When selecting the best GPU for machine learning, scalability is vital. A scalable GPU system can adapt as your ML workloads grow. In 2025, ML projects are more demanding. They often require additional computational power over time. Hence, GPUs that support easy scaling simplify future upgrades.

Multi-GPU setups are another key consideration. These setups link multiple GPUs to work together on a single task. They are great for handling large-scale machine learning projects. When multiple GPUs share the workload, they significantly speed up the process. This means faster model training and quicker data analysis.

Here are points to remember about scalability and multi-GPU systems:

- Flexibility: A good GPU platform should allow adding more GPUs as needed. This keeps your system flexible and future-proof.

- Speed: Multi-GPU setups can process high volumes of data rapidly. This is crucial for resource-intensive ML tasks.

- Efficiency: Look for GPUs that communicate well with each other. Efficient data transfer between GPUs reduces bottlenecks.

- Software Support: Make sure the ML software you use can scale with multi-GPU setups. Not all ML frameworks manage multi-GPU configurations effectively.
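One way to check whether a multi-GPU setup is paying off is to compare measured run times. The sketch below computes speedup and scaling efficiency from two timings. The numbers in the example are hypothetical, and an efficiency well below 100% usually points to communication or data-loading bottlenecks.

```python
def scaling_efficiency(time_one_gpu, time_n_gpus, n_gpus):
    """Return (speedup, efficiency) for a job timed on 1 GPU and on n GPUs."""
    speedup = time_one_gpu / time_n_gpus
    efficiency = speedup / n_gpus  # 1.0 would be perfect linear scaling
    return speedup, efficiency


# Hypothetical timings: 100 minutes on 1 GPU, 30 minutes on 4 GPUs.
speedup, efficiency = scaling_efficiency(100.0, 30.0, 4)
print(f"speedup: {speedup:.2f}x, efficiency: {efficiency:.0%}")
# speedup: 3.33x, efficiency: 83%
```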

Committing to a GPU setup for machine learning isn’t just about the present. It’s also about planning for future challenges. Invest in GPUs that will support your ML journey as it evolves. Whether it’s expanding your system or syncing multiple GPUs, think ahead. Make a choice that aligns with your long-term machine learning goals.

Cost-Efficiency Analysis

When investing in the best GPU for machine learning, cost-efficiency is a key factor. You want to ensure that your investment pays off over time. A cost-efficient GPU not only performs well but also keeps overheads low. This analysis helps you understand the long-term economic impact of your GPU purchase.

Total Cost of Ownership

The Total Cost of Ownership (TCO) includes the purchase price and all costs across the GPU’s life. These costs cover energy consumption, cooling systems, and maintenance. A GPU with a lower TCO is often more desirable as it can lead to substantial savings. To calculate TCO, add up the initial costs with the expected operational expenses over the GPU’s lifespan.
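The TCO calculation described above can be sketched in a few lines. All figures in the example are hypothetical, and the `cooling_overhead` parameter is our own simplification that treats cooling as a fixed fraction of extra energy use.

```python
def total_cost_of_ownership(purchase_price, avg_power_watts, hours_per_year,
                            price_per_kwh, years, annual_maintenance=0.0,
                            cooling_overhead=0.0):
    """Purchase price plus energy, cooling, and maintenance over the lifespan."""
    energy_kwh_per_year = avg_power_watts / 1000 * hours_per_year
    energy_kwh_per_year *= 1 + cooling_overhead  # extra energy spent on cooling
    annual_operating = energy_kwh_per_year * price_per_kwh + annual_maintenance
    return purchase_price + annual_operating * years


# Hypothetical GPU: $2,000, 300 W average draw, 4,000 hours per year,
# $0.15 per kWh, 3-year lifespan, $50 yearly maintenance, 20% cooling overhead.
tco = total_cost_of_ownership(2000, 300, 4000, 0.15, 3,
                              annual_maintenance=50, cooling_overhead=0.2)
print(f"TCO: ${tco:,.2f}")  # TCO: $2,798.00
```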

Performance per Dollar

Performance per dollar evaluates how much value you get from each dollar spent. Compare the processing speeds and memory sizes to their costs. Aim for a GPU that offers a good balance between cost and capabilities. This metric is essential for budget-conscious decisions, ensuring you don’t overspend on features you may not need.
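As a small illustration, the sketch below ranks candidate GPUs by throughput per dollar. The GPU names, throughput figures, and prices are made up. In practice, use throughput measured on your own workload rather than a spec-sheet number.

```python
def rank_by_performance_per_dollar(candidates):
    """Rank GPUs by throughput per dollar, best first.

    candidates maps a name to a (throughput, price) pair.
    """
    ratios = {name: throughput / price
              for name, (throughput, price) in candidates.items()}
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


# Hypothetical candidates: (TFLOPS, price in dollars).
candidates = {"GPU A": (40.0, 1600.0), "GPU B": (25.0, 800.0)}
for name, ratio in rank_by_performance_per_dollar(candidates):
    print(f"{name}: {ratio * 1000:.2f} TFLOPS per $1,000")
# GPU B: 31.25 TFLOPS per $1,000
# GPU A: 25.00 TFLOPS per $1,000
```

Here the slower card wins on value, which is the kind of result this metric is meant to surface.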

Future Trends and Evolutions in Machine Learning GPUs

As we look towards the future, the evolution of GPUs in machine learning is undeniable. Technological advancements and growing demands shape this evolution. In the quest for the best GPU for machine learning, future trends play a crucial role. Here are some anticipated evolutions:

- Specialized AI Cores: GPUs already include cores designed for AI processes, and we expect these to become more specialized. They will likely offer more efficient model training and inferencing capabilities.

- Enhanced Memory Solutions: Tomorrow’s GPUs may come with even more memory and faster bandwidth. They could use newer generations of HBM (High Bandwidth Memory), which is already found on data-center GPUs, to handle massive datasets.

- Energy Efficiency: Manufacturers will focus more on energy-efficient designs. This will reduce the operational costs of running machine learning models.

- Integrated Machine Learning Systems: GPUs might be part of all-in-one machine learning solutions. These systems will bundle software and hardware optimized for AI workloads.

- Quantum GPU Computing: More speculatively, we might see quantum computing principles applied alongside GPU architecture. If that happens, it could change processing speeds and problem-solving abilities considerably, though this remains far from certain.

- AI-driven Optimization: Machine learning itself could be used to optimize GPU performance, making them smarter and more adaptive to tasks.

As these trends take shape, staying informed and adaptable is critical. Choose GPUs that are not just suitable for today’s needs but also prepared for tomorrow’s challenges in machine learning.