Part 1: Introduction to Modern GPU Architectures

Modern GPU architectures have evolved from hardware built primarily to render video game graphics into general-purpose parallel processors used in machine learning, scientific simulation, and cryptography.

1. Increased Computational Power: The Engine of Innovation

Modern GPUs deliver far more computational power than earlier generations. This gain comes mainly from integrating thousands of relatively simple cores on a single chip and from programming models built around parallel processing. Because a GPU applies the same operation to many data elements at once, it handles throughput-heavy computations much more efficiently than a processor that works through the elements one at a time.
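To make the data-parallel model concrete, here is a minimal CPU-side sketch in Python. It only simulates the idea: the names `saxpy_kernel` and `launch` are hypothetical, not part of any GPU library, and an ordinary loop stands in for the hardware that would run the per-element work concurrently.

```python
# CPU-side simulation of the GPU data-parallel model (illustrative only).
# On a real GPU the kernel invocations would run concurrently in hardware;
# here a plain loop stands in for the hardware scheduler.

def saxpy_kernel(thread_id, a, x, y, out):
    """Work done by one hypothetical GPU thread: a single output element."""
    if thread_id < len(x):  # guard: the grid may be larger than the data
        out[thread_id] = a * x[thread_id] + y[thread_id]


def launch(kernel, num_blocks, threads_per_block, *args):
    """Invoke the kernel once per (block, thread) pair, like a grid launch."""
    for block in range(num_blocks):
        for thread in range(threads_per_block):
            kernel(block * threads_per_block + thread, *args)


def saxpy(a, x, y, threads_per_block=4):
    """Compute a * x + y element by element using the simulated launch."""
    out = [0.0] * len(x)
    # Round up so that every element is covered by some thread.
    num_blocks = (len(x) + threads_per_block - 1) // threads_per_block
    launch(saxpy_kernel, num_blocks, threads_per_block, a, x, y, out)
    return out


result = saxpy(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0])
```

Each invocation writes only its own output element, which is why the work can be spread across many cores without coordination between them.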

This computational power has reshaped industries from gaming and entertainment to scientific research and artificial intelligence. The ability to process large amounts of data in parallel has enabled breakthroughs in machine learning and high-performance computing, and GPUs are likely to become more powerful and versatile as the technology advances.

2. Versatility in Applications: Beyond Gaming

Traditionally, GPUs were primarily used to render graphics for video games and other visual applications. However, their versatility has expanded significantly in recent years, making them indispensable tools for a wide range of applications.

Data Science and Machine Learning: GPUs have become essential tools for data scientists and machine learning engineers. Their ability to handle large datasets and perform complex calculations in parallel has accelerated the development of advanced algorithms and models.

Artificial Intelligence: GPUs are at the heart of artificial intelligence, powering tasks such as image and speech recognition, natural language processing, and autonomous vehicle technology.

High-Performance Computing: GPUs have emerged as a powerful tool for high-performance computing, enabling researchers to tackle complex simulations and scientific computations.

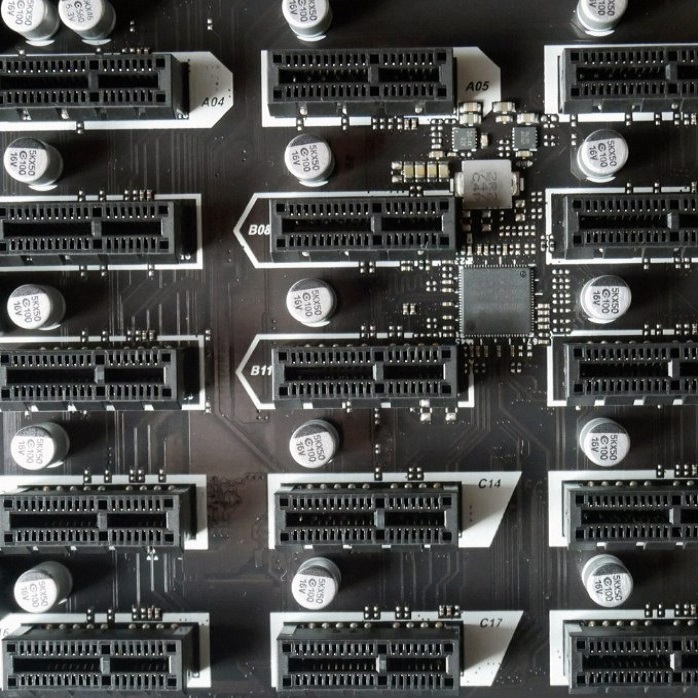

Cryptocurrency Mining: The computational power of GPUs has also made them valuable for cryptocurrency mining, although this application is evolving rapidly due to changing mining algorithms and increased competition.

As GPU technology continues to evolve, we can expect even more innovative and groundbreaking applications to emerge, pushing the boundaries of what is possible.

Part 2: Advancements in GPU Architecture

Advances in GPU architecture have been driven by the need for more powerful and efficient computing systems. The resulting designs handle graphics processing and also excel at parallel, general-purpose computing.

1. Unified Memory Architecture: A Seamless Integration

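One of the most significant advancements in modern GPU architecture is the introduction of unified memory. This approach removes, from the programmer's point of view, the traditional distinction between system memory (accessible by the CPU) and GPU memory (accessible by the GPU). Instead, it presents a single memory pool that both the CPU and GPU can access through the same addresses. On systems with a discrete GPU, the two memories usually remain physically separate and data is migrated between them on demand; on integrated designs, the CPU and GPU can share the same physical memory.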

The benefits of unified memory are numerous. Because the system moves data to where it is needed, programs avoid hand-written transfers between CPU and GPU memory, and data that is never accessed does not have to be copied at all. This is particularly advantageous for applications that require frequent data exchange, such as machine learning, scientific computing, and real-time graphics rendering, although automatic migration has a cost of its own and performance-critical code may still need tuning.

Furthermore, unified memory simplifies programming and development. Developers no longer need to allocate and synchronize separate buffers for the CPU and GPU, which leads to shorter and less error-prone code. This has made GPU programming accessible to a wider range of developers and has accelerated the development of GPU-accelerated applications.
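The difference in programming style can be illustrated with a toy model in Python. This is a conceptual sketch only: `ExplicitCopyDevice` and `UnifiedMemoryDevice` are hypothetical classes invented for this example, not a real GPU API, and Python lists stand in for memory buffers.

```python
# Toy model of two memory-management styles (not a real GPU API).

class ExplicitCopyDevice:
    """Separate host and device buffers: the program copies data itself."""

    def __init__(self):
        self.device_buffer = []
        self.copies = 0  # number of explicit transfers performed

    def copy_to_device(self, host_data):
        self.device_buffer = list(host_data)  # independent copy
        self.copies += 1

    def run_kernel(self):
        self.device_buffer = [v * v for v in self.device_buffer]

    def copy_to_host(self):
        self.copies += 1
        return list(self.device_buffer)


class UnifiedMemoryDevice:
    """One shared buffer: host and device code use the same object."""

    def __init__(self, shared):
        self.shared = shared  # the very list the host code holds

    def run_kernel(self):
        for i, v in enumerate(self.shared):
            self.shared[i] = v * v


# Explicit style: copy in, compute, copy out.
host = [1, 2, 3]
explicit = ExplicitCopyDevice()
explicit.copy_to_device(host)
explicit.run_kernel()
explicit_result = explicit.copy_to_host()

# Unified style: the kernel result is visible through the same buffer.
shared = [1, 2, 3]
UnifiedMemoryDevice(shared).run_kernel()
```

In the unified version the host code never issues a transfer. On real hardware the runtime may still move data behind the scenes, which this model deliberately leaves out.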

2. Specialized Cores: Tailored for Performance

In addition to unified memory, modern GPU architectures incorporate specialized cores that handle particular types of computation, such as matrix multiplication, convolution, and other tensor operations, far more efficiently than general-purpose cores.

One notable example is tensor cores, which are specifically designed to accelerate deep learning workloads. A tensor core performs a fused matrix multiply-accumulate on small tiles of data, typically with reduced-precision inputs, and these operations dominate the cost of training and running deep neural networks. Executing them in dedicated hardware significantly speeds up both training and inference, which has enabled researchers and developers to build larger and more sophisticated AI models.
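The core operation can be written as D = A × B + C on small tiles. The following Python sketch shows the arithmetic only, under stated assumptions: `mma_tile` is a hypothetical helper, the tiles are square lists of rows, and the reduced-precision number formats and fixed tile shapes of real hardware are ignored.

```python
def mma_tile(a, b, c):
    """Return D = A @ B + C for small square tiles given as lists of rows."""
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]  # start from the accumulator tile
            for k in range(n):
                acc += a[i][k] * b[k][j]  # multiply and accumulate
            d[i][j] = acc
    return d


identity = [[1.0, 0.0], [0.0, 1.0]]
a = [[1.0, 2.0], [3.0, 4.0]]
c = [[0.5, 0.5], [0.5, 0.5]]
d = mma_tile(a, identity, c)  # A times the identity, plus C
```

Dedicated hardware performs the whole tile update as one operation instead of the three nested loops shown here, and a large matrix product is built by repeating it over many tiles.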

Other specialized cores target specific applications, including ray tracing cores and video encoding/decoding cores. Combined with unified memory, these specialized cores have significantly expanded the capabilities of modern GPUs and made them essential tools for a wide range of industries.

Part 3: The Role of GPUs in Machine Learning and AI

The use of GPUs in machine learning and artificial intelligence has dramatically increased in recent years due to their ability to handle parallel processing and complex calculations efficiently. This has led to the development of specialized architectures and frameworks that are optimized for machine learning and AI workloads.

1. Parallel Processing for Training and Inference

GPUs excel at parallel processing, which makes them well suited to training and running machine learning models. Training runs that were once impractically slow now finish much sooner, so researchers can test more ideas in the same amount of time, and this has sped up innovation across the field of AI.

2. Specialized Architectures for Deep Learning

With the rise of deep learning, GPU manufacturers have developed specialized architectures and hardware acceleration for deep learning workloads. This has further improved the performance and efficiency of deep learning tasks, allowing for more complex and accurate models to be developed.

Part 4: Applications of GPUs in Scientific Computing

The use of GPUs in scientific computing has opened up new possibilities for researchers and scientists, enabling them to perform complex simulations and data analysis at a much faster pace than before. This has led to significant advancements in fields such as physics, chemistry, and biology.

1. Accelerating Simulations and Data Analysis

The parallel processing capabilities of GPUs have greatly accelerated simulations and data analysis in scientific computing. This has made it possible for researchers to simulate more complex systems and analyze larger datasets, leading to deeper insights and discoveries.
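A typical example of a simulation that parallelizes well is a grid-based update in which each point depends only on its neighbors. The sketch below shows one time step of 1D heat diffusion in plain Python. It is illustrative only: `diffusion_step` and the coefficient `alpha` are assumptions made for this example, and the loop runs sequentially where a GPU would assign one thread per grid point.

```python
def diffusion_step(u, alpha):
    """One explicit time step of 1D heat diffusion with fixed end values."""
    new_u = list(u)  # boundary points keep their old values
    for i in range(1, len(u) - 1):
        # Each interior point reads only the old array, so all points
        # could be updated at the same time by separate GPU threads.
        new_u[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return new_u


# A single hot spot in the middle of a cold rod spreads to its neighbors.
u = [0.0, 0.0, 1.0, 0.0, 0.0]
u = diffusion_step(u, alpha=0.25)
```

Writing into a separate output array is the key design choice: no update depends on another update from the same step, so the order of execution does not matter. This explicit scheme is only stable for `alpha` up to 0.5.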

2. Development of Specialized Libraries and Frameworks

The growing demand for GPU-accelerated scientific computing has led to the development of specialized libraries and frameworks optimized for GPU architectures. These tools let researchers harness the power of GPUs for their specific needs without writing low-level GPU code themselves, which further improves the efficiency and speed of their work.

Part 5: Challenges and Limitations of Modern GPU Architectures

While modern GPU architectures have brought about significant advancements in computing, they also come with their own set of challenges and limitations that need to be addressed.

1. Power Consumption and Heat Dissipation

Modern GPUs are known for their high power consumption and heat dissipation, which can pose challenges in terms of energy efficiency and cooling in large-scale deployments. This has led to the need for more efficient cooling solutions and power management techniques to mitigate these challenges.

2. Programming Complexities

Developing applications that fully utilize modern GPU architectures is difficult. It requires specialized knowledge of parallel programming and of how to restructure algorithms so that many threads can work independently, which can be a barrier for many developers.
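Summing a list is a small example of the restructuring involved. A simple running total forces each addition to wait for the previous one, so parallel code commonly uses a pairwise (tree) pattern instead. The Python sketch below simulates that pattern sequentially; `tree_reduce_sum` is a hypothetical helper written for illustration, not a library function.

```python
def tree_reduce_sum(values):
    """Sum a list with the pairwise pattern used by parallel reductions.

    Returns the total and the number of sequential steps required.
    """
    data = list(values)
    n = len(data)
    stride = 1
    steps = 0
    while stride < n:
        # All additions within one step touch disjoint elements, so a GPU
        # could perform them concurrently; the steps themselves run in order.
        for i in range(0, n - stride, 2 * stride):
            data[i] += data[i + stride]
        stride *= 2
        steps += 1
    return (data[0] if data else 0), steps


total, steps = tree_reduce_sum([1, 2, 3, 4, 5, 6, 7, 8])
```

Eight values need seven additions either way, but the tree pattern arranges them into three steps whose additions are independent of one another. A running total would need seven dependent steps.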

Part 6: Future Trends and Developments in GPU Architectures

Looking ahead, the future of GPU architectures holds great promise, with ongoing advancements and developments that are poised to further enhance the capabilities and potential of GPUs in various fields.

1. Continued Focus on AI and Machine Learning

GPU manufacturers will continue focusing on developing specialized architectures for AI and machine learning workloads. This will further improve the performance and efficiency of these tasks.

2. Integration with Other Computing Technologies

As computing technologies continue to evolve, we can expect tighter integration between GPUs and other accelerators such as FPGAs and ASICs. Combining them should produce more powerful and versatile computing systems that can handle a wide range of workloads.

In conclusion, modern GPU architectures have transformed computing. They have enabled advanced graphics rendering and powered complex simulations and data analysis. With ongoing advancements, their potential will continue to grow, leading to more powerful and versatile computing systems that can meet the demands of a wide range of applications.