Introduction to GPU Accelerated Computing

In the realm of computing, GPU accelerated computing stands as a significant advancement. It merges the capabilities of a Graphics Processing Unit (GPU) with a computer’s central processing unit (CPU) to enhance overall computing power. This synergy accelerates tasks, especially those requiring parallel processing, like image analysis or complex simulations. As technology progresses, understanding GPU accelerated computing becomes essential for developers and IT professionals.

Key Concepts and Evolution of GPUs

GPUs were initially crafted for rendering graphics. Their architecture makes them excellent at handling multiple operations simultaneously – a feature utilized beyond graphical tasks. The evolution of GPUs has been remarkable, transitioning from solely graphic-focused devices to powerful general-purpose processors. This expansion into General Purpose GPU (GPGPU) computing allows for diverse applications including scientific modeling and machine learning.

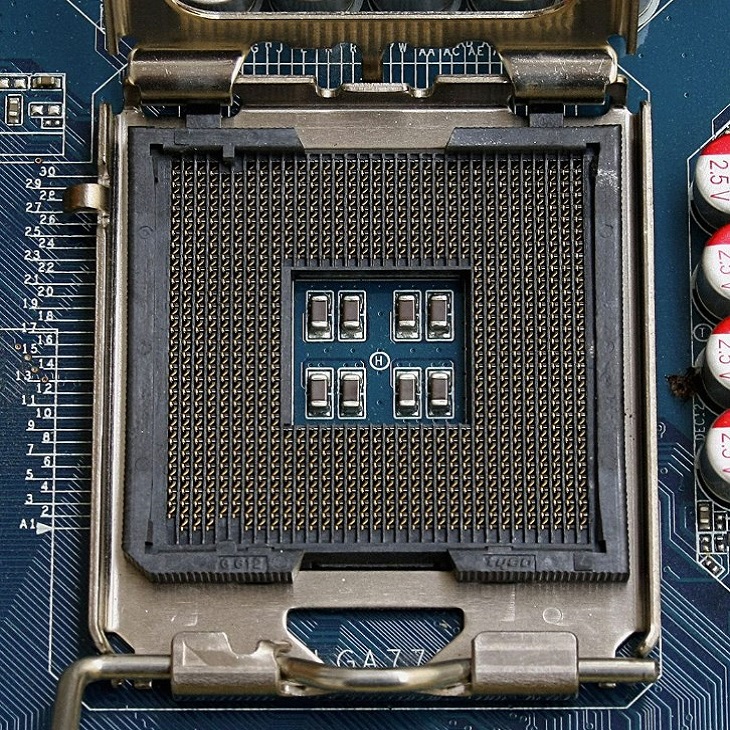

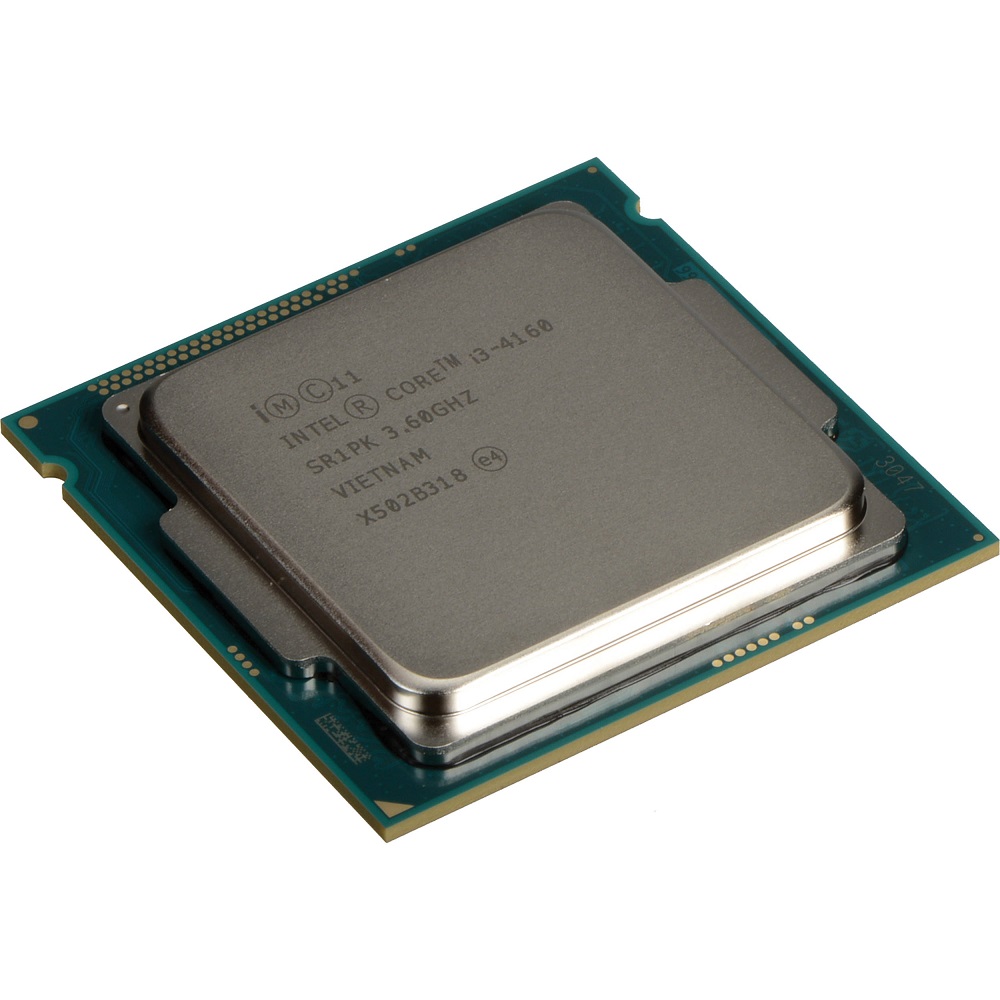

CPU vs. GPU: Understanding the Differences

At the core of understanding GPU accelerated computing is recognizing the differences between CPUs and GPUs. CPUs consist of a few cores optimized for sequential task execution, making them great for tasks that require decisions and complex logic. GPUs, on the other hand, consist of thousands of smaller cores. They excel at executing many tasks in parallel. This makes GPUs better suited for computational work that can be done in parallel, like matrix operations or data processing that doesn’t require sequential logic.
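A minimal sketch of this distinction, in plain Python with no GPU required. The first function applies the same operation to every element independently, which is the shape of work a GPU can spread across its cores. The second, as written, carries a value from one step to the next, so each step waits for the previous one. Both function names are illustrative and not taken from any library.

```python
def scale_all(values, factor):
    """Data-parallel: each output depends only on its own input element."""
    return [v * factor for v in values]


def running_balance(deposits, rate):
    """Sequential: each step needs the balance produced by the previous step."""
    balance = 0.0
    history = []
    for deposit in deposits:
        balance = (balance + deposit) * (1.0 + rate)
        history.append(balance)
    return history


print(scale_all([1, 2, 3, 4], 10))
print(running_balance([100.0, 100.0], 0.5))
```

Work shaped like the first function is the natural candidate for GPU offloading; work shaped like the second usually stays on the CPU unless it can be reformulated.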

Programming APIs for GPU Acceleration

The power of GPU Acceleration is harnessed through specific programming APIs. These interfaces allow developers to allocate GPU resources effectively and write programs that can run on GPUs. Below we dive into the popular APIs that facilitate GPU programming.

Overview of CUDA: The Nvidia Parallel Computing Platform

Nvidia’s CUDA (Compute Unified Device Architecture) is a leading platform for GPU programming. Introduced in 2006, it gives developers access to the GPU’s virtual instruction set, letting them launch compute kernels (functions that run across many GPU threads at once). CUDA follows the SIMT (Single Instruction, Multiple Threads) execution model, a close relative of SIMD (Single Instruction, Multiple Data) in which groups of threads execute the same instruction on different data. This suits workloads like scientific simulations. CUDA supports C/C++ and Fortran, with wrappers for other languages such as Python, increasing its versatility.
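To make the kernel idea concrete, here is a rough emulation in plain Python of how a one-dimensional CUDA launch assigns one array element to each thread. It is only a sketch: `launch` and `add_kernel` are hypothetical helpers written for this illustration, and the loops run one after another, whereas a real GPU would run the threads concurrently.

```python
def launch(kernel, grid_dim, block_dim, *args):
    """Emulate a 1-D launch by calling kernel once per (block, thread) pair.

    On a real GPU these calls would run concurrently; here they run in a loop.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Each emulated thread computes at most one output element."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):  # guard: the grid may be larger than the data
        out[i] = a[i] + b[i]


n = 10
a = list(range(n))
b = [10 * x for x in range(n)]
out = [0] * n

threads_per_block = 4
blocks = (n + threads_per_block - 1) // threads_per_block  # ceiling division
launch(add_kernel, blocks, threads_per_block, a, b, out)
print(out)
```

The index arithmetic and the bounds check mirror what a typical element-wise CUDA kernel does; the grid is rounded up to whole blocks, so some threads have nothing to do.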

OpenCL: The Open Standard for Heterogeneous Computing

OpenCL (Open Computing Language) is an open standard for heterogeneous computing: it allows the same code to run across a variety of processors, including CPUs, GPUs, and even FPGAs. Maintained by the Khronos Group, the standard has wide industry support. OpenCL kernels are written in a C-based language (a C++ variant is also available), and wrappers bring its capabilities to other programming environments.

OpenACC: Simplifying GPU Programming for Scientists and Engineers

For scientists and engineers, OpenACC offers a more streamlined GPU programming experience. It’s a high-level, directive-based standard that helps adapt code for heterogeneous computing. Without delving into the complexities of lower-level languages, it provides an easier path to accelerate programs using GPUs. OpenACC supports mainstream languages like C/C++ and Fortran, focusing on the computational demands of scientific research.

Practical Applications of GPU Programming

The increasing demand for computational power has meant that GPU programming has found many practical applications across various fields, revolutionizing the way complex tasks are approached and resolved.

Accelerated Scientific Computing

In scientific computing, simulations and models that once took days to compute can now be processed much faster using GPUs. Bioinformatics, astrophysics, and climate modeling are examples where the parallel processing strengths of GPUs significantly reduce computation times. Accelerated computations allow scientists to run more simulations, fine-tune models, and achieve results quicker than ever before.

Enhancements in Machine Learning Workflows

Machine learning, particularly deep learning, benefits greatly from GPU programming. Training neural networks is a resource-intensive task that GPUs handle well due to their ability to perform multiple operations concurrently. Consequently, GPUs are key to quicker model iterations, allowing for rapid enhancements and advances in AI and data analytics.

Real-time Data Processing and Analysis

For applications that require immediate insights, like financial trading algorithms or video processing, GPUs make it possible to analyze and process large volumes of data in real time. This is crucial in fields where timeliness is as important as accuracy, ensuring that decisions are based on the most current data available.

GPU Programming with Python

GPU programming means harnessing a GPU’s parallel processing power for general computational tasks. Because Python is widely used in data-intensive domains, combining it with GPU acceleration has become increasingly popular.

Utilizing CUDA with Python for High-Performance Computing

Python developers can leverage CUDA for high-performance tasks. Using libraries that interface with CUDA allows Python code to execute operations on the GPU. This setup is optimal for complex computations that benefit from parallel execution, such as simulations and large-scale data analysis.

CUDA’s integration with Python facilitates the use of the GPU’s capabilities without needing in-depth knowledge of its architecture. This lowers the barrier to entry for Python developers to optimize their code for GPUs. By adopting CUDA, they tap into the speed and efficiency of parallel computing, leading to faster results and more efficient processing times.

Libraries and Frameworks: TensorFlow, PyTorch, and CuPy

For machine learning and neural networks, TensorFlow and PyTorch are top choices. They abstract away the complexity of GPU programming, allowing researchers and engineers to focus on building and training models. TensorFlow places operations on an available GPU by default, while PyTorch runs on the GPU once tensors and models are moved to it explicitly. Either way, no hand-written kernels are needed, which is crucial for handling the large datasets and heavy computations involved in machine learning.
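As a hedged illustration of how a framework hands work to the GPU, the sketch below uses PyTorch to pick a device and run a small matrix product on it. The helper name `pick_device` and the tensor shapes are assumptions made for this example. The code falls back to the CPU when PyTorch or a CUDA device is missing.

```python
def pick_device():
    """Return "cuda" when PyTorch is installed and sees a GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch missing: nothing to place on a GPU
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(f"Selected device: {device}")

try:
    import torch

    weights = torch.ones(256, 256, device=device)  # allocated on the chosen device
    inputs = torch.ones(8, 256, device=device)
    outputs = inputs @ weights  # runs where the tensors live
    print(outputs.shape, outputs.device)
except ImportError:
    print("PyTorch is not installed; skipping the tensor example")
```

The same script runs unchanged on a laptop without a GPU and on a CUDA machine, which is the main convenience these frameworks offer.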

CuPy is another powerful library. It mirrors much of the NumPy API and acts as a near drop-in replacement, so array-based computations can move to the GPU with few changes. Developers familiar with NumPy can therefore often speed up their applications without rewriting their codebase.
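The drop-in idea can be sketched by writing a function against a module alias, here called `xp`, that points at CuPy when a GPU is usable and at NumPy otherwise. The alias and the helpers `normalize_rows` and `to_host` are conventions assumed for this example, not part of either library. NumPy is assumed to be installed.

```python
import numpy as np

try:
    import cupy as cp

    cp.cuda.runtime.getDeviceCount()  # raises if no usable CUDA device
    xp = cp
except Exception:  # broad on purpose: import or driver errors both mean "no GPU"
    xp = np


def normalize_rows(matrix):
    """Scale each row to unit length; works with NumPy or CuPy arrays."""
    norms = xp.linalg.norm(matrix, axis=1, keepdims=True)
    return matrix / norms


def to_host(array):
    """Return a NumPy array, copying from GPU memory if needed."""
    return array if xp is np else xp.asnumpy(array)


data = xp.asarray([[3.0, 4.0], [6.0, 8.0]])
result = to_host(normalize_rows(data))
print(result)
```

Only the import and the final copy back to host memory know which library is in use; the numerical code in `normalize_rows` is identical for both.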

In summary, the use of CUDA with Python and its associated libraries transforms the performance of data processing and machine learning workloads, underscoring the importance of GPUs in current computational practices.

Writing Custom GPU-Accelerated Algorithms

When off-the-shelf solutions won’t fit the bill for specific computational problems, writing custom GPU-accelerated algorithms is the way to go. Python developers, in particular, can access powerful tools designed for crafting algorithms that fully exploit the GPU’s parallel processing capabilities. By writing custom GPU code, you can fine-tune performance and tackle unique computational challenges. Let’s explore the tools and methods available for Python programmers jumping into custom GPU-accelerated algorithm development.

Introduction to Numba for Python Developers

Numba is a just-in-time compiler that turns Python functions into optimized machine code at runtime. It’s a go-to tool for Python users seeking to write high performance functions without leaving the comfort of Python’s syntax. With Numba, you can parallelize your Python code and specifically target GPU execution. Start by marking functions with decorators to tell Numba where to focus its optimization efforts. Once set up, Numba compiles your functions to run on the GPU, leading to substantial gains in processing speed.
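A minimal sketch of the decorator workflow, shown on the CPU target so that it runs without a GPU. The function `sum_of_squares` is a made-up example, and the fallback decorator exists only so that the snippet still executes, uncompiled, where Numba is not installed. GPU kernels use a different decorator, `numba.cuda.jit`, and need an explicit launch configuration, which is not shown here.

```python
try:
    from numba import njit  # compiles the decorated function on first call
except ImportError:
    def njit(func):
        """Fallback so the example still runs, uncompiled, without Numba."""
        return func


@njit
def sum_of_squares(n):
    """Sum i*i for i in [0, n) using a plain loop that Numba can compile."""
    total = 0.0
    for i in range(n):
        total += i * i
    return total


print(sum_of_squares(10))
```

The first call includes compilation time when Numba is present, so any timing comparison should measure a second call.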

Achieving Optimal Performance with PyCUDA

For Python developers needing maximum control over their GPU programming, PyCUDA provides the solution. It’s a module that bridges Python with CUDA, offering deep integration with the GPU’s architecture. With PyCUDA, you’re closer to the metal, which means you can manage memory, tune thread usage, and fine-tune kernel execution yourself. While more involved than high-level tools like Numba, PyCUDA allows for a precise approach to GPU programming, letting you optimize an algorithm down to small details when performance matters most.

Setting Up Your GPU Programming Environment

To begin with GPU programming, you need the right environment. A crucial part of that is CUDA installation, which enables your programs to interact with the GPU. Here, we provide a simple guide to help you get your GPU programming environment up and running, especially on Ubuntu systems.

Installing CUDA on Ubuntu 20.04

To install CUDA on Ubuntu 20.04, first download the CUDA Toolkit from Nvidia’s official site, choosing the installer that matches your system. Then open a terminal and run the commands Nvidia provides for that installer. The process involves setting up the repository, installing its signing key and the toolkit, and configuring your system to use the GPU. Once installed, confirm that the GPU is visible to the driver with the ‘nvidia-smi’ command, and that the toolkit’s compiler is on your path with ‘nvcc --version’. Together, these checks confirm that your setup is correct.

Remember, your GPU must be CUDA-capable for this to work. CUDA is also released in multiple versions, so pick one that is compatible with your GPU, your driver, and the libraries you plan to use. Check Nvidia’s documentation for more details.
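The visibility check can also be scripted. The sketch below, using only the Python standard library, calls nvidia-smi and returns what it reports, or an empty list when the tool is missing or fails. The function name `query_gpus` is an assumption made for this example.

```python
import shutil
import subprocess


def query_gpus():
    """Return a list of "name, driver_version" strings, or [] if none are visible."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools not installed or not on PATH
    try:
        completed = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=True,
            timeout=30,
        )
    except (subprocess.SubprocessError, OSError):
        return []  # tool present but the driver could not be queried
    return [line.strip() for line in completed.stdout.splitlines() if line.strip()]


gpus = query_gpus()
print(gpus if gpus else "No NVIDIA GPU visible to the driver")
```

An empty result points to a driver or PATH problem rather than a toolkit problem, which narrows down where to look next.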

Benchmarking Performance: CPU vs. GPU

After setting up, you might want to compare CPU and GPU performance. Benchmarking helps you understand the speed gain from using GPU acceleration. For this, write a small Python script that uses two libraries: NumPy for CPU computations and CuPy for GPU computations.

First, create large matrices. Then run the same operations with NumPy and CuPy and measure the time each takes. Because GPU calls return before the work finishes, synchronize the device before stopping the timer, and exclude the first (warm-up) run. For large matrix operations, this comparison typically shows a significant time reduction on the GPU.

This exercise gives you a clear idea of when and why to use GPU acceleration. If your operations don’t show much improvement, they may not benefit from parallel execution, or the data may be too small to offset the cost of transferring it to the GPU.
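One possible shape for such a script is sketched below. The matrix size, the repeat count, and the helper name `time_matmul` are arbitrary choices for illustration. NumPy is assumed to be installed, and the GPU half is skipped when CuPy or a CUDA device is unavailable.

```python
import time

import numpy as np

try:
    import cupy as cp

    cp.cuda.runtime.getDeviceCount()  # raises if no usable CUDA device
except Exception:  # broad on purpose: import or driver errors both mean "no GPU"
    cp = None


def time_matmul(xp, n, repeats=3):
    """Return the best wall-clock time in seconds for an n x n matrix product."""
    a = xp.random.rand(n, n).astype(xp.float32)
    b = xp.random.rand(n, n).astype(xp.float32)
    # GPU work is asynchronous, so CuPy needs an explicit synchronize call.
    sync = cp.cuda.Stream.null.synchronize if xp is cp else (lambda: None)
    xp.matmul(a, b)  # warm-up run, excluded from timing
    sync()
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        xp.matmul(a, b)
        sync()  # wait until the result is actually computed
        best = min(best, time.perf_counter() - start)
    return best


n = 1024
cpu_seconds = time_matmul(np, n)
print(f"NumPy (CPU): {cpu_seconds:.4f} s")
if cp is not None:
    gpu_seconds = time_matmul(cp, n)
    print(f"CuPy (GPU):  {gpu_seconds:.4f} s, speedup {cpu_seconds / gpu_seconds:.1f}x")
else:
    print("CuPy or a CUDA device is unavailable; GPU timing skipped")
```

This version times only the computation. Including the cost of copying data to and from the GPU would give a more conservative figure, and results vary widely with hardware and matrix size.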

To conclude, setting up your GPU programming environment is the first step towards faster and more effective computing. By installing CUDA and benchmarking, you prepare yourself to dive into the world of GPU accelerated computations.

The Future of GPU Programming

As GPU programming continues to evolve, it is shaping the future of computing. Increased efficiency and capabilities of GPUs make them essential in advanced computing tasks. Anticipating the future requires understanding the trends in GPU technology and its direction.

Trends and Predictions in GPU Technologies

GPU technologies are advancing quickly, spurred by demands for more power and efficiency. Several trends stand out. These include more cores, faster memory, and specialized features for AI and machine learning. We expect GPUs to push the boundaries of deep learning, facilitating breakthroughs in fields like natural language processing and autonomous vehicles.

GPUs will likely become more energy-efficient, reducing the carbon footprint of data centers. As AI models grow, energy-efficient GPUs will be crucial. We predict wider adoption of GPUs in cloud computing, offering scalable GPU power to more users.

Advancements in software will complement hardware improvements. Programming tools and libraries will become more user-friendly, opening GPU programming to a broader audience.

Making the Choice: When to Opt for GPU Acceleration

Deciding when to use GPU acceleration depends on the task. For parallelizable work, like large data analysis or simulations, GPUs are a smart choice. They often process these tasks much faster than CPUs.

If computational tasks increase in complexity and size, consider GPUs. They excel at handling massive, repetitive computations. When dealing with smaller tasks or those needing sequential processing, CPUs may suffice.
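One way to reason about this trade-off is Amdahl's law, which estimates the overall speedup when only part of a program is accelerated. It is brought in here as a rule of thumb, not something the discussion above derives. The function name and the 100x figure for the accelerated portion are assumptions chosen for illustration.

```python
def overall_speedup(parallel_fraction, accelerated_speedup):
    """Amdahl's law: speedup of the whole program when only part is accelerated."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if accelerated_speedup <= 0:
        raise ValueError("accelerated_speedup must be positive")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / accelerated_speedup)


# Hypothetical case: the parallel part runs 100x faster on the GPU.
for fraction in (0.5, 0.9, 0.99):
    print(f"{fraction:.0%} parallel -> {overall_speedup(fraction, 100.0):.1f}x overall")
```

The serial portion quickly dominates: unless most of the runtime is parallelizable, even a very fast GPU yields a modest overall gain, which is why smaller or mostly sequential tasks are often best left on the CPU.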

In summary, GPU acceleration is key when speed and processing power are at a premium. As GPU technologies advance, they will become even more integral to computing across industries.